Lies, Damned Lies and Sloppy Statistical Analysis Make for Bad Journalism

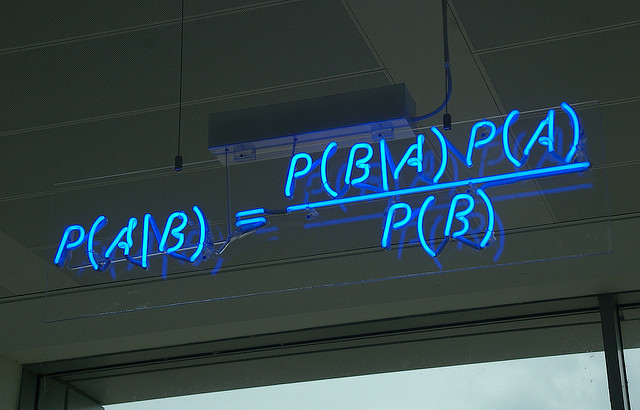

Bayes’ theorem, central to probability theory and statistics. Photo: Matt Buck / Flickr

By Rithy Odom

A World Conference of Science Journalists 2017 panel on separating statistical fact from fiction, designed to help science journalists navigate the morass of statistics that supposedly hold individual findings to some standard of reliability or truth, played to a full house of science communicators on 28 October.

In the session, “There is a 95% Chance You Should Attend This Session on Statistics,” seasoned journalists and statisticians demystified the art of parsing numbers accurately.

When it comes to numbers, “common sense can help you avoid statistical pitfalls in science reporting,” said Kristin Sainani, associate professor of health research and policy at Stanford University. She suggested several guiding principles for journalists who routinely face statistical findings head-on.

The numbers can overwhelm those unfamiliar with statistics, leading many writers to give up rather than work to understand and verify them.

When the statistics become overwhelming, Sainani said, science writers often defer uncritically to the conclusions the scientists provide. The problem, she added, can be just as confounding for researchers themselves.

Her advice: “You should not blindly accept the authors’ take on their data, and don’t be afraid to ask basic questions if the simple numbers don’t match with the conclusion of the paper.”

She also urged journalists to look for pictures or graphics, which can be an effective way to translate what the data mean.

Writers also need to pay attention to baseline numbers such as sample size. “Always ask how big is the effect, not just if it was statistically significant,” Sainani said. Writers should also consider the totality of the evidence, not just one measure.
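To make Sainani's point concrete, here is a minimal, hypothetical sketch in Python. The numbers are invented for illustration and are not drawn from any study discussed at the panel. It applies a standard two-sided z-test to two imaginary studies, assuming a known standard deviation of 1 in each group so that the difference in means equals the standardized effect size (Cohen's d).

```python
import math
from statistics import NormalDist


def two_sample_z_test(effect_size_d, n_per_group):
    """Hypothetical helper: two-sided z-test for a difference in means between
    two equal-sized groups, assuming a known common standard deviation of 1,
    so the mean difference equals Cohen's d. Returns (z, p_value)."""
    standard_error = math.sqrt(2 / n_per_group)  # SE of the difference in means
    z = effect_size_d / standard_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided p-value
    return z, p_value


# Imaginary study A: a tiny effect measured in a huge sample.
z_a, p_a = two_sample_z_test(effect_size_d=0.02, n_per_group=100_000)

# Imaginary study B: a moderate effect measured in a small sample.
z_b, p_b = two_sample_z_test(effect_size_d=0.5, n_per_group=20)

print(f"Study A: d = 0.02, z = {z_a:.2f}, p = {p_a:.2g}")
print(f"Study B: d = 0.50, z = {z_b:.2f}, p = {p_b:.2g}")
```

With these assumed inputs, study A clears the conventional p < 0.05 threshold while study B does not, even though B's effect is 25 times larger. Statistical significance depends heavily on sample size; the effect size is what tells a reader how big the difference actually is.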

Seeking more evidence

Christie Aschwanden, lead science writer for the online news and analysis site FiveThirtyEight, echoed Sainani’s advice about drawing conclusions from all available evidence instead of relying on a single study or finding.

As an example of the danger of relying on a single data set, Aschwanden cited a study in which 29 teams made up of 61 analysts were given the same data and asked whether soccer referees give more red cards to dark-skinned players than to light-skinned ones. Working from identical data, the analysts arrived at three separate conclusions.

This points to a major pitfall: journalists sometimes rely on a single data source or study to suggest that something may be true, ignoring other data sets that could shed light on what the findings actually mean. As Aschwanden said, “[When] you put these all together, you are getting a better answer with the aggregate than any single one.”

Tips for the wary and the weary

Drawing on years of experience writing for major publications such as The New York Times, Discover, and Popular Science, Aschwanden shared a few guidelines to help science journalists do a better job of interpreting scientific results and conclusions.

One valuable piece of advice, she said, is that writers should always emphasize that uncertainty is acceptable in science. Writers should avoid creating the impression that a single finding is an absolute truth and should instead look for other evidence or studies to inform their stories.

Andrew Gelman, a professor of statistics and political science at Columbia University, said that cognitive bias, or systematic errors in thinking or perception, is another common problem among those who grapple with the statistical conclusions of a study.

“Even statisticians continue to wrestle or argue with [bias], or even get it wrong,” he explained.

In that regard, Aschwanden added, “Every time you frame something, you need to check other evidence.”

She also suggested that writers stay alert to motivated reasoning and other cognitive biases by asking, “How could you be wrong?” and “How does this all go together?”

In short, the panelists agreed that science journalism improves when reporters question statistical conclusions and methods. Their advice: look beyond the abstract and the press release, read the paper's methods section, check the sample size, and don't treat any isolated conclusion as scientific gospel.

—

Rithy Odom is a student of media and communication and a graduate of the Department of International Studies, Royal University of Phnom Penh, Cambodia. He has internship experience with the Voice of America and was a member of the U.S. Ambassador Youth Council and the Young Southeast Asian Leaders Initiative.